The Vibe Code Backlash Is Real, And I'm Somewhere in the Middle

Recently the r/selfhosted moderation team dropped an announcement that sent the community into a frenzy: starting now, AI-assisted and "vibe-coded" projects can only be posted on Fridays. They're calling it "Vibe Code Friday."

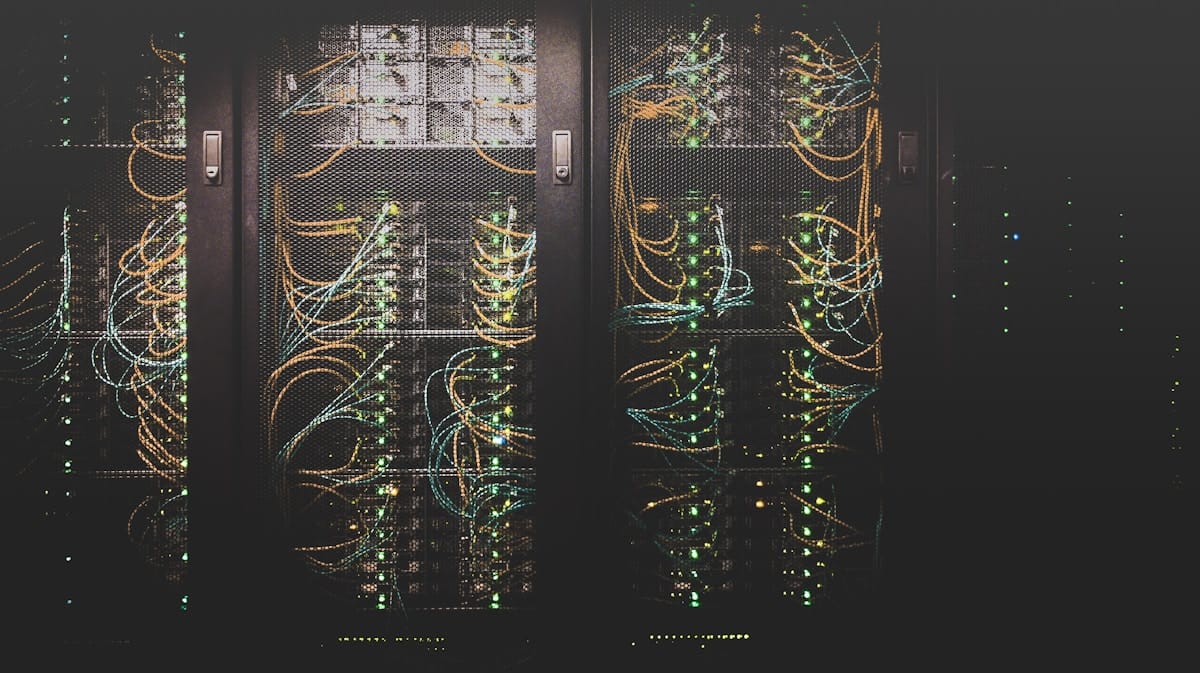

The response was predictably chaotic. Half the community is cheering, and the other half is calling it gatekeeping. I run 100+ Docker containers across four servers, I've built two SaaS products (Pews and Mutiny), and I integrate identity platforms for a Fortune 500. So I think I've got some perspective here.

🤔 What Is "Vibe Coding" Anyway?

"Vibe coding" became the phrase du jour in early 2025. The idea is simple. You describe what you want to an AI, it writes the code, you deploy it, and you call it shipped. No deep understanding required. You're "surfing the vibe" of the AI's output.

It can be a genuine productivity multiplier. It can also be a ticking time bomb running in your homelab, serving real traffic, with nobody understanding what's underneath.

The r/selfhosted mods specifically called out projects that are:

- Younger than a month old

- Single collaborator (you + an AI persona doesn't count)

- With "obvious signs of vibe-coding"

They're not wrong. The subreddit has been flooded with these. But the knee-jerk reaction misses a more nuanced reality.

🏗️ My Actual Stack vs. What AI Helps With

I run:

- 6-node Proxmox cluster with MicroK8s

- SENAITE LIMS deployments on AWS Lightsail (Docker, Traefik, Terraform, Ansible)

- Pews (church management SaaS) and Mutiny (team communication platform)

- Fine-tuned 20B MLX models running locally on Apple Silicon

None of that was "vibe coded." Every piece cost me real debugging sessions. SENAITE taught me that recreating a container wipes ZODB if the data isn't on a persistent volume. Traefik taught me that static configs and dynamic configs are not the same thing, and you will hate yourself if you mix them up at 11 PM.

But AI coding tools were part of building all of it. The difference is that I understand what the code does. I've debugged it. I've watched it fail and traced through logs without AI hand-holding, because the model doesn't know my specific environment.

⚖️ The Real Line

The problem isn't AI assistance. It's skipping the understanding layer.

There's a spectrum. On one end you have "vibe coding," where you describe an app and ship it without reading the output. On the other end you have AI-assisted engineering, where you use AI to go faster on things you already understand. The first is dangerous in production. The second is just modern development.

When I use Claude to scaffold a Ghost API integration, I read the output. I understand why it's generating a JWT with HS256. I know what aud: '/admin/' means. If it breaks at 3 AM, I can fix it without the AI. That's the line.
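To make that concrete, here is a minimal sketch of what such a token looks like when built by hand with only the Python standard library. It assumes the Ghost Admin API key has the usual `id:secret` shape with a hex-encoded secret. The function name `make_ghost_admin_token` and the five-minute lifetime are my own illustrative choices, not something lifted from a real integration.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as the JWT format requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_ghost_admin_token(admin_api_key: str, now: Optional[int] = None) -> str:
    """Build a short-lived HS256 JWT from an 'id:secret' admin key.

    Hypothetical helper for illustration. The secret half of the key is
    assumed to be hex-encoded, so it is decoded to raw bytes before signing.
    """
    key_id, secret_hex = admin_api_key.split(":")
    issued_at = int(time.time()) if now is None else now

    # 'kid' tells the server which key signed the token.
    header = {"alg": "HS256", "typ": "JWT", "kid": key_id}
    # 'aud' scopes the token to the admin API; 'exp' keeps it short-lived.
    payload = {"iat": issued_at, "exp": issued_at + 5 * 60, "aud": "/admin/"}

    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(
        bytes.fromhex(secret_hex),
        signing_input.encode("ascii"),
        hashlib.sha256,
    ).digest()
    return signing_input + "." + b64url(signature)
```

The token then goes into an `Authorization: Ghost <token>` request header. The point of writing it out once is that every field has a reason, and knowing those reasons is what lets you debug a 401 at 3 AM.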

🖥️ The Homelab Stakes Are Different

What the vibe code debate misses when applied to homelabs is that the stakes vary wildly.

A weekend project that generates memes? Vibe code away. But if you're running:

- DNS for your entire home network

- LIMS for an actual laboratory with paying clients

- Identity infrastructure tied to production SSO

- Monitoring and alerting for your homestead on six acres

...you need to understand what's running. Not because AI is bad, but because you're the on-call engineer for your own infrastructure. When my SENAITE instance was serving stale data, no AI diagnosed it from the outside. I traced it to a ZODB transaction isolation issue over two hours. You need to own your stack.

🔧 How I Actually Use AI in Production Contexts

Some examples from my own workflow:

Good use, boilerplate and scaffolding:

```hcl
# AI generated this Terraform module skeleton
# I then read every line, understood the resource graph,
# and added the state locking config it missed
module "lightsail_lims" {
  source            = "./modules/lightsail"
  instance_name     = var.lims_instance_name
  availability_zone = var.az
  blueprint_id      = "ubuntu_22_04"
  bundle_id         = "medium_2_0"
}
```

Good use, debugging assistance:

Paste the logs, ask "what's happening here," get a hypothesis, then verify it yourself. The AI gives you direction. You do the confirmation.

Bad use, deploying without reading:

I've seen this destroy homelab setups. AI writes a Docker Compose file, someone deploys it, then discovers six months later it's been running with privileged: true and no resource limits on a container with a CVE.
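Reading the file is the real fix, but a small check can catch the two problems named above before anything gets deployed. This is a rough sketch, not a security scanner. It assumes the Compose file has already been parsed into a dict (for example with PyYAML's `yaml.safe_load`), and `audit_compose` is a hypothetical helper name. It only looks for privileged mode and for memory and CPU limits set either through the service-level `mem_limit` and `cpus` keys or under `deploy.resources.limits`.

```python
from typing import Any


def audit_compose(compose: dict[str, Any]) -> list[str]:
    """Return human-readable findings for risky service settings.

    Hypothetical helper: flags privileged services and services
    without any memory or CPU limit configured.
    """
    findings: list[str] = []
    for name, svc in (compose.get("services") or {}).items():
        svc = svc or {}  # an empty service parses as None in YAML

        if svc.get("privileged") is True:
            findings.append(f"{name}: runs privileged")

        deploy = svc.get("deploy") or {}
        limits = (deploy.get("resources") or {}).get("limits") or {}

        if "mem_limit" not in svc and "memory" not in limits:
            findings.append(f"{name}: no memory limit")
        if "cpus" not in svc and "cpus" not in limits:
            findings.append(f"{name}: no CPU limit")
    return findings


# Illustrative input only; the image names are placeholders.
sample = {
    "services": {
        "app": {"image": "example/app:latest", "privileged": True},
        "db": {"image": "example/db:latest", "mem_limit": "512m", "cpus": 1.0},
    }
}

for finding in audit_compose(sample):
    print(finding)
```

A check like this does not replace understanding why a container asked for privileged mode in the first place. It just makes the question impossible to miss.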

Good use, documentation and code comments:

AI is phenomenal at explaining code in plain English. I use it constantly to write README files and inline comments for my own future self.

🌐 The Community Moderation Question

Is "Vibe Code Friday" the right call? Yeah, as a short-term signal it makes sense.

The r/selfhosted community exists to share knowledge about real systems and real problems. When the feed fills with AI-generated weekend projects that haven't survived 48 hours of real traffic, the signal-to-noise ratio collapses.

What I'd actually want to see is a "Battle Tested" flair. A community signal that a project has been running in someone's real environment for 90+ days. That's more useful than restricting which day you can post, but moderation tools are blunt instruments, and I get why they went there.

🧠 Lessons Learned

- AI is a multiplier, not a replacement for understanding. Use it to go faster on things you already know.

- The debugging session is non-negotiable. If you've never broken your stack and fixed it manually, you don't own it. You're renting it from the AI.

- Homelab means you're the SRE. When it breaks at 3 AM there's no ticket to file.

- Vibe coding isn't the enemy. Skipped comprehension is. I generate code with AI every day. I just read it first.

- The community backlash is worth hearing. r/selfhosted is tired of surface-level projects. Build deeper. Share the hard lessons, not just the wins.

If you're using AI to build something real, show your work. Share the part where it broke and what you learned. That's what makes a homelab post worth reading.